The Crisis in Cancer Research and Treatment

The First Cell: And the Human Costs of Pursuing Cancer to the Last, Azra Raza, 2019

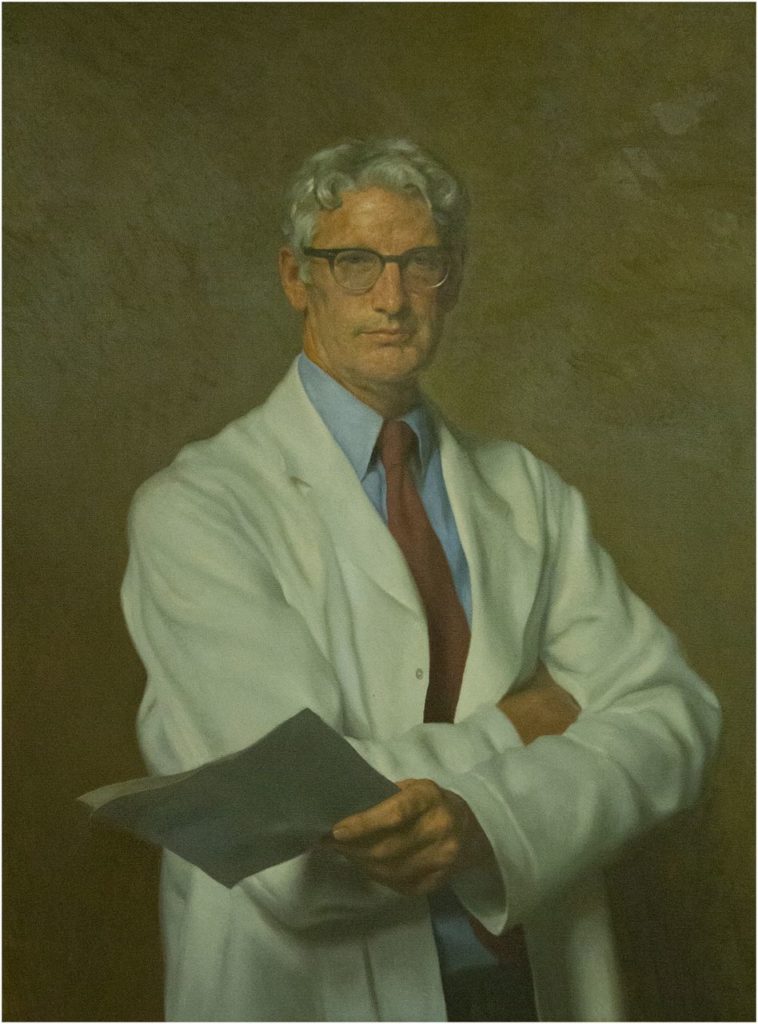

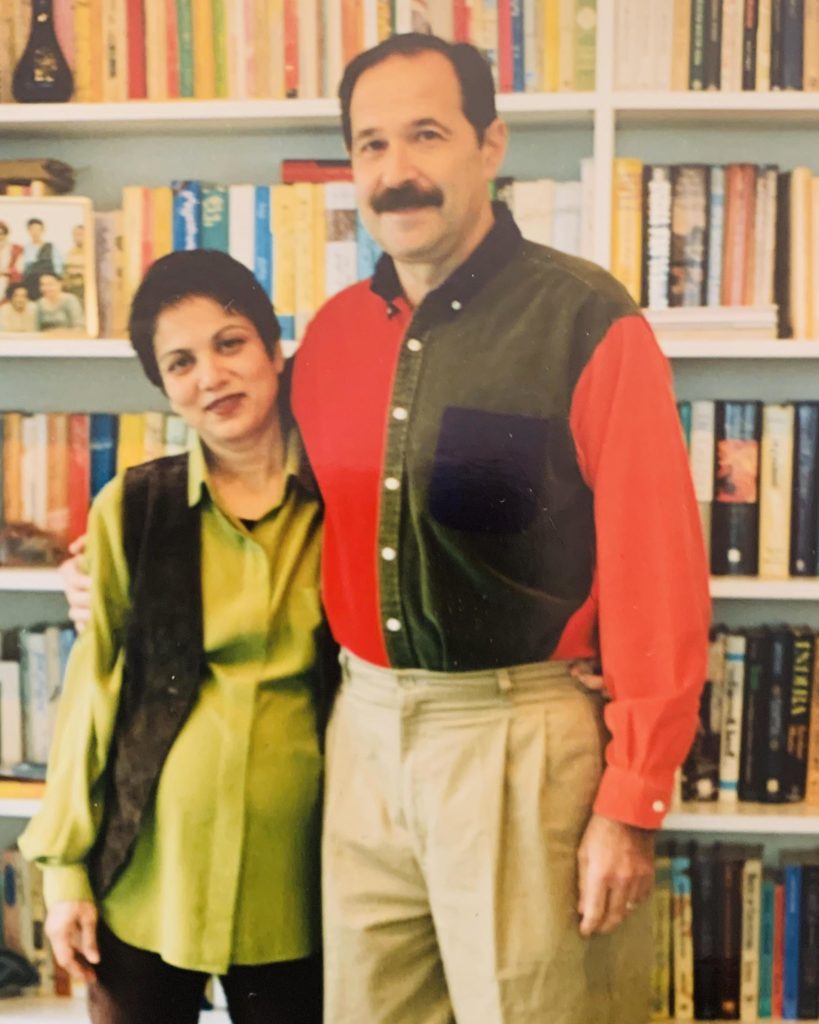

Dr. Azra Raza and Dr. Harvey Preisler

This book is intended as a wake-up call for all of us who may have assumed that cancer research was making progress. The articulate Dr. Raza has spent her career helplessly watching patient after patient die with only fifty-year-old therapies available (slash, poison, burn), including her own husband Harvey, himself a specialist in the very cancer that killed him. The book combines portraits of some of her special patients, a description of the complexity of cancer that is impenetrable reading for us laymen, and a plea for rebooting the entire field of cancer research and treatment. The argument for rebooting our approach is best presented in Dr. Raza’s own words.

Over the twelve-year period from 2002 to 2014, seventy-two new anticancer drugs gained FDA approval; they prolonged survival by 2.1 months. Of eighty-six cancer therapies for solid tumors approved between 2006 and 2017, the median gain in overall survival was 2.45 months…A study published in the British Medical Journal showed that thirty-nine of sixty-eight cancer drugs approved by the European regulators between 2009 and 2013 showed no improvement in survival or quality of life over existing treatment, placebo, or in combination with other agents.

Identifying predictive markers that allow for individualizing therapy by matching drugs to patients remains the treasured yet elusive holy grail of oncology… More than 90 percent of trials ongoing around the country make almost zero attempt to save tumor samples for post hoc examination to identify predictive biomarkers…Who is pushing this short-term agenda driven by the singular goal of getting a drug approved with alacrity as long as it meets the bar of improving survival by mere weeks in a few patients?

A pioneering and revolutionary paper was published in 1976 by Peter Nowell. His clairvoyance about cancer being an evolving entity has been largely ignored. From “The Clonal Evolution of Tumor Cell Populations”:

Tumor cell populations are apparently more genetically unstable than normal cells, perhaps from activation of specific gene loci in the neoplasm, continued presence of carcinogen, or even nutritional deficiencies within the tumor. The acquired genetic instability and associated selection process, most readily recognized cytogenetically, results in advanced human malignancies being highly individual karyotypically and biologically. Hence, each patient’s cancer may require individual specific therapy, and even may be thwarted by emergence of a genetically variant subline resistant to the treatment. More research should be directed toward understanding and controlling the evolutionary process in tumors before it reaches the late stage usually seen in clinical cancer.

…in 2009, Gina Kolata reported in her New York Times column the jaw-dropping statistics that despite the infusion of more than $100 billion into cancer research, death rates for cancer had dropped by only 5 percent between 1950 and 2005 when adjusted for size and age of the population. The war on cancer was not going well.

A review of where the research funds go reveals the inherent biases perpetuated by the peer-review process as detailed by Clifton Leaf in his eye-opening book, The Truth in Small Doses: Why We’re Losing the War on Cancer and How to Win It. Enormous sums of money from the government continue to fund the same institutions and universities over and over…The saddest part is that upon serious examination of what is published, 70 percent of the basic research is not reproducible and 95 percent of clinical trials are unmitigated disasters.

A recent study titled “Death or Debt? National Estimates of Financial Toxicity in Persons with Newly-Diagnosed Cancer,” published in the October 2018 issue of the American Journal of Medicine, tabulated the chilling economic burden borne by patients with newly diagnosed cancer. Using the Health and Retirement Study Data, this longitudinal study identified 9.5 million estimated new cases of cancer between 1998 and 2012 in the United States. Two years from diagnosis, 42.5 percent of individuals had depleted their entire life’s assets, and 38.2 percent incurred longer-term insolvency, cancer costs being highest during treatment and in the final months of life. The most vulnerable groups were those with worsening cancer, older age, females, retired individuals, and those suffering from comorbidities like diabetes, hypertension, lung and heart diseases, belonging to a lower socioeconomic group, or on Medicaid.

The unfortunate reality is that not a single marker for response is examined in the majority of clinical trials being conducted even today. Why? Because this is how the system has evolved. The pharmaceutical industry sponsoring the trials is only interested in reaching a statistical end point to get their agent approved. The companies have usually invested almost a billion dollars already to bring an agent to the point of a phase 3 trial. It would add a staggering amount of money to their stretched budgets to perform such detailed biomarker analysis. I suggest saving all the money being squandered on testing the agents in pretherapy, preclinical models of cell lines, and mouse models and instead investing the resources in biomarker analysis. Some bold changes are needed at every level. To harness rapidly evolving fields like imaging, nanotechnology, proteomics, immunology, artificial intelligence, and bioinformatics, and focus them on serving the cause of the cancer patient, we must insist on collaboration between government institutions (NCI, FDA, CDC, DOD), American Society of Clinical Oncology, American Society of Hematology, funding agencies, academia, philanthropy, and industry.

Contrast the putative scientific gold standard of a reproducible animal model with the known fact that every patient’s cancer is a unique disease, and within each patient, cancer cells that settle in different sites are unique. When a malignant cell divides in two, it can produce daughter cells with the same or radically different characteristics because during the process of DNA replication, fresh copying errors constantly occur. Even if two cancer cells have identical genetics, much like identical twins, their behavior can differ depending on genes expressed or silenced according to the demands of a thousand variables, such as the microenvironment where they land, the blood supply available to them, and the local reaction of immune cells. The resulting expansive variety of tumor cells that exist within tumors are unique within unique sites of the body. Multiply this complexity further by adding the host’s immune response to each new clone and you get a confounding, perplexing, impenetrable situation in perpetual flux.

So what is the solution? The first step is to descend from our high horses and humbly admit that cancer is far too complex a problem to be solved with the simplistic preclinical testing platforms we have devised to develop therapies. Little has happened in the past fifty years, and little will happen in another fifty if we insist on the same old same old. The only way to deal with the cancer problem in the fastest, cheapest, and, above all, most universally applicable and compassionate way is to shift our focus away from exclusively developing treatments for end-stage disease, and concentrate on diagnosing cancer at its inception and developing the science to prevent its further expansion. From chasing after the last cell to identifying the footprints of the first.

The heyday of reductionism, looking for one culprit gene at a time and searching for the one magic bullet, is over. The era of big data, cloud computing, artificial intelligence, and wearable sensors has arrived. The study of cancer is evolving into a data-driven, quantitative science. Merging information obtained from liquid biopsies (RNA, DNA, proteomics, exosome studies, CTC), with histopathology, radiologic, and scanning techniques, aided by rapid machine learning, image reconstruction, intelligent software, and microfluidics can–and will–revolutionize the way we diagnose and prevent rather than treat cancer in the future. The ideal strategy will emerge from harnessing cutting-edge technology for a multidisciplinary systems biology approach through a consilience of scientists with expertise in molecular genetics, imaging, chemistry, physics, engineering, mathematics, and computer science.

Research is also ongoing in all these areas funded by the National Institutes of Health, but the investment remains paltry compared to funding provided for studies conducted on cell lines and animal models. Through redirection of intellectual and financial resources from the same old grant proposals to grant incentives for early detection using actual human samples, and by posing exciting challenges to competitive scientists, progress will be accelerated dramatically. The piece that is missing from the equation is an admission of failure of current strategies and a willingness to take a 180-degree turn to start all over again.

Antonio Fojo of the NCI extrapolating the implications of one trial:

“In the lung cancer trial, overall survival improved by just 1.2 months on average. The cost of an extra 1.2 months of survival? About $80,000. If we allow a survival advantage of 1.2 months to be worth $80,000, and by extrapolation survival of one year to be valued at $800,000, we would need $440 billion annually–an amount nearly 100 times the budget of the National Cancer Institute–to extend by one year the life of the 550,000 Americans who die of cancer annually. And no one would be cured.”
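For readers who want to check the arithmetic in that quote, here is a minimal sketch in Python. It only restates the figures Fojo gives ($80,000 for 1.2 months, 550,000 deaths a year) and assumes, as the quote does, that cost scales linearly with months of survival gained. The function name `annual_cost_of_extra_year` is my own label for illustration, not anything from the book.

```python
from fractions import Fraction

def annual_cost_of_extra_year(cost_per_gain, gain_months, deaths_per_year):
    """Scale a trial's cost per survival gain up to one extra year per patient.

    Assumes cost grows linearly with months gained (the quote's own
    extrapolation). Fractions keep the arithmetic exact.
    """
    months = Fraction(str(gain_months))            # "1.2" becomes exactly 6/5
    cost_per_year = cost_per_gain * Fraction(12) / months
    return cost_per_year, cost_per_year * deaths_per_year

# Figures taken from the quote above.
per_patient, national = annual_cost_of_extra_year(80_000, 1.2, 550_000)
print(f"${int(per_patient):,} per patient for one extra year")
print(f"${int(national):,} per year nationally")
```

The two printed figures match the $800,000 and $440 billion in the quote, so the extrapolation is internally consistent given its linear assumption.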

This is how complex cancer is. It is pure arrogance to think the problem can be solved by a few molecular biologists if they put their minds to it. Cancer is a perfidious, treacherous, evolving, shifting, moving target, far too impenetrable to be deconstructed systematically, far too dense to lend itself in all its plurality to recapitulation in lab dishes or animals.